Today, we’re announcing our official integration with Databricks. Here’s how it works—and what it means.

What is Databricks?

Databricks is a unified, open, web-based platform for working with Spark. Its multicloud infrastructure provides automated cluster management and IPython-style notebooks to unify data warehousing, AI, and analytics in a single platform.

The Databricks Data Lakehouse brings the best of both worlds: the reliability, governance, and performance of data warehouses with the openness, flexibility, and ML capabilities of data lakes. The Data Lakehouse efficiently handles all data types while applying a consistent security and governance approach.

Unifying data in a single platform fulfills the needs of all your data teams—engineering, analytics, BI, data science, and ML—and beautifully simplifies your modern data stack.

What is Cube?

Cube’s universal semantic layer makes lakehouse data consistent, performant, and accessible to every downstream application. We do this with data modeling that defines your metrics up-stack.

Cube’s advanced pre-aggregation capabilities powerfully condense frequently-used queries to optimize response times. Cube then provides this data to every application with GraphQL, REST, and SQL APIs so that every tool—and every data consumer—can work with the same data.

The Cube and Databricks Integration

By combining Databricks’ unified analytics and data store with Cube’s data modeling, access control, caching, and APIs, it’s now possible to build a new dashboard or embed analytics features into customer-facing apps in minutes—not weeks.

Select Cube and Databricks users already have been enjoying this integration in production for use cases like creating dashboards for internal analytics. Here’s one example: by consuming operational data with Kafka, transforming it into insights with Databricks, then using Cube as an API and caching layer to power visualization layers, users have been able to find actionable insights and identify opportunities to optimize their operations.

It’s easy to connect a workspace to Cube using Databricks’ SQL endpoint. Here’s how.

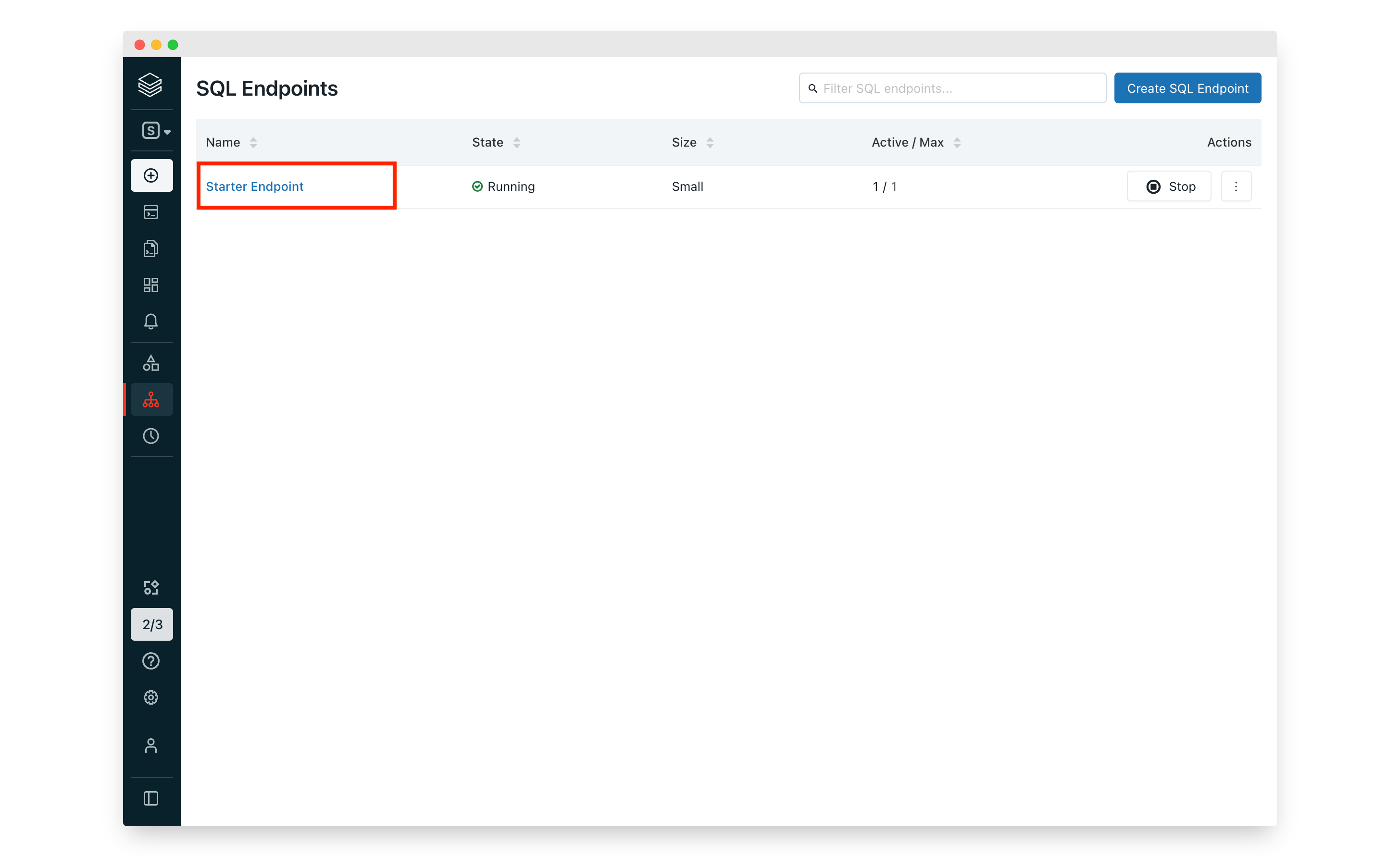

For starters, we recommend using Cube with the Databricks SQL Endpoint. Follow Databricks’ docs to create a SQL Endpoint.

Creating a new SQL endpoint

- Log in to your Databricks Workspace account.

- Navigate to the SQL Console on the left hand bar and select SQL from the drop-down menu.

- Click API Endpoints, and then on the top right, select +New SQL Endpoint.

- Next, name your endpoint and choose a Cluster Size. Make sure to set the Auto Stop to at least 60-minutes of no activity, then click Create.

After you’ve configured data access on the SQL Endpoint, it’s time to get your credentials for the SQL Endpoint.

- In the Databricks SQL Console, go to Endpoints and click on the endpoint you created.

- Navigate to Connection Details and copy the HTTP Path, Port, and Server Hostname:

Once you have your credentials, generate a Personal Token.

- Navigate to the Admin Console, click on Settings, and select User Settings.

- Toggle over Personal Access Tokens and click on +Generate New Token.

- Name your token and set the expiration to “Lifetime”, then click Generate.

- Copy the generated JDBC URL.

- Attach your newly-generated personal token to the JDBC URL you copied in step 6, e.g.

jdbc:spark://dbc-xxxxxxxx-3957.cloud.databricks.com:443:443/default;transportMode=http;ssl=1;AuthMech=3;httpPath=/sql/1.0/endpoints/0b27a1dfc18f82ae;UID=token;PWD=PERSON-TOKEN - Finally, select the database you’d like to query with Cube. In our example, we’ll use “default”.

Connecting Databricks to Cube

- Sign into Cube Cloud and use the wizard to create a new deployment. Select Databricks from the database connection list.

- Enter your Databricks JDBC URL and personal token as well as your database name to connect.

- If needed, copy Cube Cloud’s IP addresses and add them to your Databricks allow-list. (Databricks’ documentation for IP access lists is here.)

That’s it! Now you are ready to use Cube on top of your Databricks to model your data, manage security rules, apply caching, and expose your data definitions to any downstream application via Cube’s REST, GraphQL, or SQL APIs.

You can check out more examples of integrations with visualization tools on our website.

Try the Cube and Databricks Integration for yourself

To follow the tutorial above and experience everything this integration offers, check out our docs—and, if you don’t already have one, sign up for a free Cube Cloud account.

Tell us what you think

We’d love to hear from you, learn what you’re building, and strategize how to help. Find us via Cube’s Slack and GitHub communities—or schedule a time to chat 1:1.